I recently discovered this amazing forum, and a quick search showed me that there is only one mention of MusiKraken so far. As the app is all about musical expression (including poly expression), I thought a quick introduction might be useful.

MusiKraken is an iOS and Android app (and currently also has a web version). It is basically a modular expressive MIDI controller that allows you to (mis-)use all sensors of your mobile device, tablet or computer as a building block for your own custom MIDI controller setup.

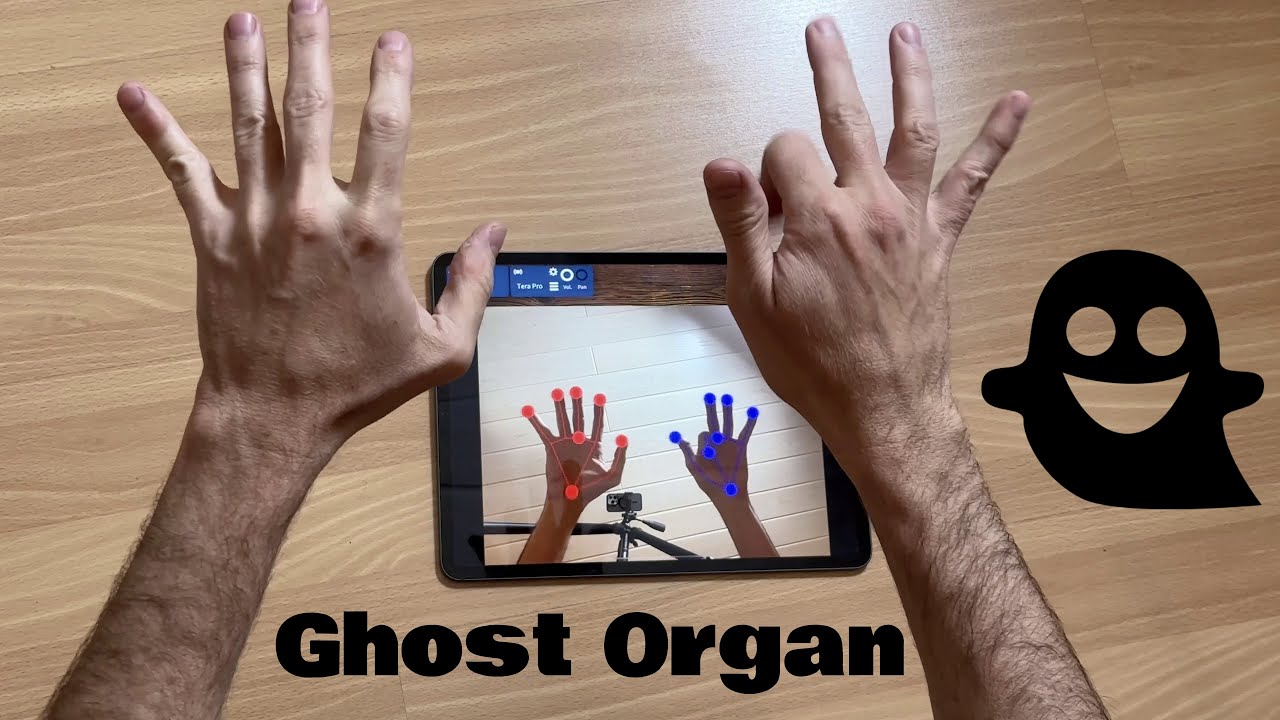

So for example it supports multi-touch, camera (hand tracking including depth sensing on iOS devices with a TrueDepth/LiDAR sensor, body pose tracking, face tracking, color tracking and so on), motion sensors, microphone, game controllers, Apple Watch, motion sensors in AirPods… The long-term goal is to have the app with the most human-computer-interaction types on the app store (it already might be, but just to make sure, I will add more…).

The app supports all kinds of MIDI transports: Inter-app MIDI, MIDI via USB (if supported by your device), MIDI via WiFi, MIDI via Bluetooth (including my own implementation of MIDI via WiFi and Bluetooth for Android, for devices that do not officially support it). The iOS app can also host Audio Units (AUv3) and send raw data from the sensors via OSC. And it officially supports MIDI 2.0 (as a corporate member of the MIDI Association I even signed the licensing contract for the MIDI 2.0 logo, which I haven’t even used yet).

And the app has many MIDI effects that can be combined with the sensors and the outputs to create whatever expressive MIDI controller you want.

For poly expression, the keyboard module in the app supports MPE. So you can slide on the keys and use touch radius or pressure (when supported by the device) to control the different MPE parameters. But you can also combine the keyboard with a Chord Splitter module to split chords into separate voices and send each one to a separate MIDI channel. This way you can kind of create your own MPE-like instrument by controlling all kinds of parameters (Control Change, Channel Pressure, Pitch Bend etc.) separately per key.
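To illustrate the chord-splitting idea, here is a rough Python sketch. It is not the app's actual code: the function names `split_chord` and `pitch_bend`, the starting channel 2, and the fixed velocity are all assumptions made for this example. It only shows how giving each voice its own channel lets a channel-wide message like Pitch Bend affect a single note.

```python
def split_chord(notes, first_channel=2, velocity=100):
    """Assign each note of a chord to its own MIDI channel (1-based).

    Returns raw MIDI 1.0 Note On messages, lowest note first.
    """
    messages = []
    for voice, note in enumerate(sorted(notes)):
        channel = first_channel + voice
        if channel > 16:
            raise ValueError("more voices than available MIDI channels")
        status = 0x90 | (channel - 1)  # Note On; the channel nibble is 0-based
        messages.append(bytes([status, note, velocity]))
    return messages


def pitch_bend(channel, value):
    """Build a MIDI 1.0 Pitch Bend message (14-bit value, 8192 = center)."""
    if not 0 <= value <= 0x3FFF:
        raise ValueError("pitch bend value must fit in 14 bits")
    status = 0xE0 | (channel - 1)
    return bytes([status, value & 0x7F, (value >> 7) & 0x7F])


# A C major triad: each note lands on its own channel (2, 3, 4),
# so bending channel 3 only affects the E.
chord = split_chord([60, 64, 67])
bend_e_only = pitch_bend(3, 8192 + 1024)
```

Because every voice sits alone on its channel, any per-channel message (Control Change, Channel Pressure, Pitch Bend) effectively becomes a per-note message, which is the same trick MPE relies on.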

And if you enable MIDI 2.0 in the settings (and find a synth that actually supports it, which is still very, very rare), you can also already send MIDI 2.0 registered and assignable per-note controller and per-note pitch bend events (I haven’t found any other MIDI controller yet that already supports this. But as long as there are no synths that support it, it is also kind of useless).
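For anyone curious what such a per-note event looks like on the wire, here is a small Python sketch of how a MIDI 2.0 per-note pitch bend can be packed into a 64-bit Universal MIDI Packet, based on my reading of the UMP layout (message type 0x4, opcode 0x6, 32-bit value with 0x80000000 as center). The function name `per_note_pitch_bend` is made up for this example, and this is not how the app itself is implemented.

```python
def per_note_pitch_bend(group, channel, note, value):
    """Pack a MIDI 2.0 per-note pitch bend into two 32-bit UMP words.

    group and channel are 0-based (0..15), note is 0..127,
    value is an unsigned 32-bit bend amount (0x80000000 = no bend).
    """
    if not (0 <= group <= 15 and 0 <= channel <= 15):
        raise ValueError("group and channel must be in 0..15")
    if not 0 <= note <= 127:
        raise ValueError("note must be in 0..127")
    if not 0 <= value <= 0xFFFFFFFF:
        raise ValueError("value must fit in 32 bits")
    word0 = (
        (0x4 << 28)        # message type: MIDI 2.0 channel voice
        | (group << 24)
        | (0x6 << 20)      # opcode: per-note pitch bend
        | (channel << 16)
        | (note << 8)      # lowest byte is reserved and stays 0
    )
    return word0, value


# Centered (no bend) on middle C, group 0, channel 0
words = per_note_pitch_bend(0, 0, 60, 0x80000000)
```

Unlike the channel-per-voice approach, the note number is part of the message itself, so several notes on the same channel can each be bent independently.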

Here is a quick overview of what it can do: https://www.youtube.com/watch?v=_WHd4yWv418