as a developer, I'd say testing against midi files won't gain you very much…

you know the mpe spec,

really, if you are supporting pitchbend, cc74 and channel pressure per note (on ch 2-16), then there is not much to it. to test that, you could ‘drive’ your plugin with any DAW that supports MPE.
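to make that concrete, here is a minimal sketch of the per-note handling in Python (a real plugin would more likely do this in C++). the names `NoteExpression` and `apply_mpe_message` are made up for illustration, the starting values are arbitrary, and it assumes a single zone with member channels 2-16 and raw MIDI bytes as input:

```python
from dataclasses import dataclass


@dataclass
class NoteExpression:
    """Per-channel expression state (hypothetical structure, arbitrary starting values)."""
    pitch_bend: int = 8192  # 14-bit value, 8192 = centre
    timbre: int = 0         # cc74, 0-127
    pressure: int = 0       # channel pressure, 0-127


def apply_mpe_message(state, msg):
    """Update state from one raw MIDI message; return True if it was handled.

    state maps a channel number (2-16) to a NoteExpression.
    """
    if len(msg) < 2:
        return False
    kind = msg[0] & 0xF0
    channel = (msg[0] & 0x0F) + 1  # 1-16
    if not 2 <= channel <= 16:
        return False  # assumption: ch 1 is not a per-note channel here

    if kind == 0xE0 and len(msg) >= 3:
        # pitch bend: LSB first, then MSB, 7 bits each
        state.setdefault(channel, NoteExpression()).pitch_bend = msg[1] | (msg[2] << 7)
    elif kind == 0xB0 and len(msg) >= 3 and msg[1] == 74:
        state.setdefault(channel, NoteExpression()).timbre = msg[2]
    elif kind == 0xD0:
        state.setdefault(channel, NoteExpression()).pressure = msg[1]
    else:
        return False
    return True
```

note on/off handling and zone configuration are left out on purpose, this only covers the three expression messages.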

the only other thing to consider is that these 3 messages come in quite a bit more frequently than normal midi. it does depend on the surface, but upwards of 50 msg/second is not unusual.

… but on a modern computer, I've never had an issue with this.

(ok, if you were trying to alter FFT parameters in real time, perhaps)
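if you do want to check that your plugin copes with that message density, a synthetic stream is enough. a rough sketch follows: `synthetic_expression_stream` is a hypothetical helper, the 1 Hz sine wobble and the value ranges are arbitrary choices, and how you feed the bytes to your plugin is up to your host or test harness. it only exercises throughput, it says nothing about how the plugin feels to play.

```python
import math


def synthetic_expression_stream(channel, seconds, rate_hz=50):
    """Yield (time_s, raw_bytes), cycling pitch bend, cc74 and channel pressure.

    One message per tick, so the total rate is rate_hz messages per second.
    """
    ch = channel - 1  # MIDI status bytes use 0-15
    for i in range(int(seconds * rate_hz)):
        t = i / rate_hz
        wobble = math.sin(2 * math.pi * t)  # arbitrary 1 Hz movement
        kind = i % 3
        if kind == 0:
            bend = int(8192 + 2000 * wobble)  # stays inside 0-16383
            yield t, bytes([0xE0 | ch, bend & 0x7F, bend >> 7])
        elif kind == 1:
            yield t, bytes([0xB0 | ch, 74, int(64 + 40 * wobble)])
        else:
            yield t, bytes([0xD0 | ch, int(64 + 60 * wobble)])
```

for example, `list(synthetic_expression_stream(2, 10.0))` gives ten seconds of messages on channel 2 at the default rate.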

past that, the feel of the sound engine will very much differ depending on the control surface and the ‘preset’ you are using. that's why a midi file won't help you much…

the ‘issue’ is, when you play an mpe controller, your expression is in a feedback loop with the sound coming back… (this is kind of the point of expressive controllers ;))

so… even on the same surface, if you play a flute-like preset vs a key-like preset, the midi you generate for expression will look very different.

(and of course, depending on the piece of music you're playing, you may have more or less expression).

also, different surfaces react so differently… a preset on your plugin that works great on a Continuum might not feel/sound that great on an Erae Touch or Linnstrument.

this is probably also why you cannot find many midi files… they are just not that useful, since they are so dependent on patches. that's why many of us never record mpe midi, but rather the audio.

honestly, if you want to ‘test’ it, it's probably best to release a demo/beta version to get some feedback.

but again, be aware, someone playing it with an Osmose will have a very different experience to someone using a Roli or a continuum…

bonus tip:

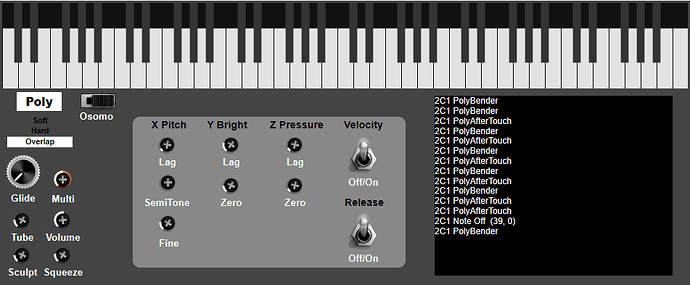

be aware that the Osmose is very different to others, because its Y axis is not independent of Z…

also, by its nature, Y on the Osmose is always unipolar, whereas Y can often be treated as bipolar on other controllers (where 64 is 0).

technically, it's no different for developers, it's all just cc74. however, it will influence presets a lot.
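as a rough illustration of what unipolar vs bipolar means for a preset, here are two ways of mapping the same cc74 value to a modulation amount. the function names and the exact scaling are my own assumptions, not something from the MPE spec or any particular synth:

```python
def timbre_unipolar(cc74):
    """Map cc74 0-127 to 0.0..1.0 (Y only ever moves one way from rest)."""
    return cc74 / 127.0


def timbre_bipolar(cc74):
    """Map cc74 0-127 to -1.0..+1.0, treating 64 as the zero point."""
    if cc74 >= 64:
        return (cc74 - 64) / 63.0  # 64..127 -> 0.0..+1.0
    return (cc74 - 64) / 64.0      # 0..63   -> -1.0..just below 0.0
```

a preset designed around the bipolar mapping expects the finger to rest near 64, so on a surface that rests at 0 it would sit at full negative modulation until Y is moved.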